Consider \(M\) events \(A_{1}, A_{2}, \ldots, A_{M}\) defined on a probability space. In this section we shall develop formulas for the probabilities of various events, defined in terms of the events \(A_{1}, \ldots, A_{M}\) , especially for \(m=\) \(0,1, \ldots, M\) , that (i) exactly \(m\) of them, (ii) at least \(m\) of them, (iii) no more than \(m\) of them will occur. With the aid of these formulas, a variety of questions connected with sampling and occupancy problems may be answered.

Theorem. Let \(A_{1}, A_{2}, \ldots, A_{M I}\) be \(M\) events defined on a probability space. Let the quantities \(S_{0}, S_{1}, \ldots, S_{M}\) be defined as follows: \begin{align} & S_{0}=1 \\ & S_{1}=\sum_{k=1}^{M} P\left[A_{k}\right] \\ & S_{2}=\sum_{k_{1}=1}^{M} \sum_{k_{2}=k_{1}+1}^{M} P\left[A_{k_{1}} A_{k_{2}}\right] \tag{6.1} \\ & \cdot \\ & \cdot \\ & \cdot \\ & S_{r}=\sum_{k_{1}=1}^{M} \sum_{k_{2}=k_{1}+1}^{M} \ldots \sum_{k_{r}=k_{r-1}+1}^{M} P\left[A_{k_{1}} A_{k_{2}} \ldots A_{k_{r}}\right] \\ & \cdot \\ & \cdot \\ & \cdot \\ & S_{M}=P\left[A_{1} A_{2} \cdots A_{M}\right] \end{align}

The definition of \(S_{r}\) is usually written \[S_{r}=\sum_{\left\{k_{1}, \ldots, k_{r}\right\}} P\left[A_{k_{1}} A_{k_{2}} \cdots A_{k_{r}}\right] \tag{$6.1^{\prime}$}\] in which the summation in \(\left(6.1^{\prime}\right)\) is over the \(\left(\begin{array}{c}M \\ r\end{array}\right)\) possible subsets \(\left\{k_{1}, \ldots, k_{r}\right\}\) of size \(r\) of the set \(\{1,2, \ldots, M\}\) .

Then, for any integer \(m=0,1, \ldots, M\) , the probability of the event \(B_{m}\) that exactly \(m\) of the \(M\) events \(A_{1}, \ldots, A_{M}\) will occur simultaneously is given by \begin{align} P\left[B_{m}\right] & =\sum_{r=n}^{M}(-1)^{r-m}\left(\begin{array}{c} r \\ m \end{array}\right) S_{r} \tag{6.2} \\ & =S_{m}-\left(\begin{array}{c} m+1 \\ m \end{array}\right) S_{m+1}+\left(\begin{array}{c} m+2 \\ m \end{array}\right) S_{m+2}-\cdots \pm\left(\begin{array}{c} M \\ m \end{array}\right) S_{M}. \end{align}

In particular, for \(m=0\) , \[P\left[B_{0}\right]=1-S_{1}+S_{2}-S_{3}+S_{4}-\cdots \pm\left(S_{M}\right). \tag{6.3}\]

The probability that at least \(m\) of the \(M\) events \(A_{1}, \ldots, A_{M}\) will occur is given by (for \(m \geq 1\) ) \[P\left[B_{m}\right]+P\left[B_{m+1}\right]+\cdots+P\left[B_{M}\right]=\sum_{r=m}^{M}(-1)^{r-m}\left(\begin{array}{c} r-1 \tag{6.4} \\ m-1 \end{array}\right) S_{r}.\]

Before giving the proof of this theorem, we shall discuss various applications of it.

Example 6A . The matching problem (case of sampling without replacement) . Suppose that we have \(M\) urns, numbered 1 to \(M\) , and \(M\) balls, numbered 1 to \(M\) . Let the balls be inserted randomly in the urns, with one ball in each urn. If a ball is put into the urn bearing the same number as the ball, a match is said to have occurred. Show that the probability that (i) at least one match will occur is

\[1-\frac{1}{2 !}+\frac{1}{3 !}-\cdots \pm \frac{1}{M!} \doteq 1-e^{-1}=0.63212, \tag{6.5}\]

(ii) exactly \(m\) matches will occur, for \(m=0,1, \ldots, M\) , is

\begin{align} \frac{1}{m !}\left\{1-1+\frac{1}{2 !}-\frac{1}{3 !}+\cdots \pm \frac{1}{(M-m) !}\right\}=\frac{1}{m !} \sum_{k=0}^{M-m}(-1)^{k} \frac{1}{k !} \tag{6.6}\\ \doteq \frac{1}{m !} e^{-1} \quad \text { for } M-m \text { large }. \end{align}

The matching problem may be formulated in a variety of ways. First variation : if \(M\) married gentlemen and their wives (in a monogamous society) draw lots for a dance in such a way that each gentleman is equally likely to dance with any of the \(M\) wives, what is the probability that exactly \(m\) gentlemen will dance with their own wives? Second variation : if \(M\) soldiers who sleep in the same barracks arrive home one evening so drunk that each soldier chooses at random a bed in which to sleep, what is the probability that exactly \(m\) soldiers will sleep in their own beds? Third variation : if \(M\) letters and \(M\) corresponding envelopes are typed by a tipsy typist and the letters are put into the envelopes in such a way that each envelope contains just one letter that is equally likely to be any one of the \(M\) letters, what is the probability that exactly \(m\) letters will be inserted into their corresponding envelopes? Fourth variation : If two similar decks of \(M\) cards (numbered 1 to \(M\) ) are shuffled and dealt simultaneously, one card from each deck at a time, what is the probability that on just \(m\) occasions the two cards dealt will bear the same number?

There is a considerable literature on the matching problem that has particularly interested psychologists. The reader may consult papers by D. E. Barton, Journal of the Royal Statistical Society , Vol. 20 (1958), pp. 73–92, and P. E. Vernon, Psychological Bulletin , Vol. 33 (1936), pp. 149–77, which give many references. Other references may be found in an editorial note in the American Mathematical Monthly , Vol. 53 (1946), p. 107. The matching problem was stated and solved by the earliest writers on probability theory. It may be of value to reproduce here the statement of the matching problem given by De Moivre ( Doctrine of Chances , 1714, Problem 35): “Any number of letters a, b, c, d, e, f, etc., all of them different, being taken promiscuously as it happens; to find the Probability that some of them shall be found in their places according to the rank they obtain in the alphabet and that others of them shall at the same time be displaced”.

Solution

To describe the distribution of the balls among the urns, write an \(n\) -tuple \(\left(z_{1}, z_{2}, \ldots, z_{n}\right)\) whose \(j\) th component \(z_{j}\) represents the number of the ball inserted in the \(j\) th urn. For \(k=1,2, \ldots, M\) the event \(A_{k}\) that a match will occur in the \(k\) th urn may be written \(A_{k}=\left\{\left(z_{1}, z_{2}, \ldots, z_{n}\right)\right. : \left.z_{k}=k\right\}\) . It is clear that for any integer \(r=1,2, \ldots, M\) and any \(r\) unequal integers \(k_{1}, k_{2}, \ldots, k_{r}, 1\) to \(M\) , \[P\left[A_{k_{1}} A_{k_{2}} \ldots A_{k_{r}}\right]=\frac{(M-r) !}{M !}. \tag{6.7}\]

It then follows that the sum \(S_{r}\) , defined by (6.1) , is given by \[S_{r}=\left(\begin{array}{c} M \tag{6.8} \\ r \end{array}\right) \frac{(M-r) !}{M !}=\frac{1}{r!}.\]

Equations (6.5) and (6.6) now follow immediately from (6.8) , (6.3) , and (6.2) .

Example 6B . Coupon collecting (case of sampling with replacement) . Suppose that a manufacturer gives away in packages of his product certain items (which we take to be coupons, each bearing one of the integers 1 to \(M\) ) in such a way that each of the \(M\) items available is equally likely to be found in any package purchased. If \(n\) packages are bought, show that the probability that exactly \(m\) of the \(M\) integers, 1 to \(M\) , will not be obtained (or, equivalently, that exactly \(M-m\) of the integers, 1 to \(M\) , will be obtained) is equal to \[\left(\begin{array}{l} M \tag{6.9} \\ m \end{array}\right) \frac{\Delta^{M-m}\left(0^{n}\right)}{M^{n}},\] where we define, for any integer \(n\) , and \(r=0,1, \ldots, n\) , \[\Delta^{r}\left(0^{n}\right)=\sum_{k=0}^{r}(-1)^{k}\left(\begin{array}{l} r \tag{6.10} \\ k \end{array}\right)(r-k)^{n}.\]

The symbol \(\Delta\) is used with the meaning assigned to it in the calculus of finite differences as an operator defined by \(\Delta f(x)=f(x+1)-f(x)\) . We write \(\Delta^{r}\left(0^{n}\right)\) to mean the value at \(x=0\) of \(\Delta^{r}\left(x^{n}\right)\) .

A table of \(\Delta^{r}\left(0^{n}\right) / r\) ! for \(n=2(1) 25\) and \(r=2(1) n\) is to be found in Statistical Tables for Agricultural, Biological , and Medical Research (1953), Table XXII.

The problem of coupon collecting has many variations and practical applications. First variation (the occupancy problem): if \(n\) distinguishable balls are distributed among \(M\) urns, numbered 1 to \(M\) , what is the probability that there will be exactly \(m\) urns in which no ball was placed (that is, exactly \(m\) urns remain empty after the \(n\) balls have been distributed)? Second variation (measuring the intensity of cosmic radiation): if \(M\) counters are exposed to a cosmic ray shower and are hit by \(n\) rays, what is the probability that precisely \(M-m\) counters will go off? Third variation (genetics): if each mouse in a litter of \(n\) mice can be classified as belonging to any one of \(M\) genotypes, what is the probability that \(M-m\) genotypes will be represented among the \(n\) mice?

Solution

To describe the coupons found in the \(n\) packages purchased, we write an \(n\) -tuple \(\left(z_{1}, z_{2}, \ldots, z_{n}\right)\) , whose \(j\) th component \(z_{j}\) represents the number of the coupon found in the \(j\) th package purchased. We now define events \(A_{1}, A_{2}, \ldots, A_{M r}\) . For \(k=1,2, \ldots, M, A_{k}\) is the event that the number \(k\) will not appear in the sample; in symbols,

\[A_{k}=\left\{\left(z_{1}, z_{2}, \ldots, z_{n}\right): \text { for } j=1,2, \ldots, n, z_{j} \neq k\right\}. \tag{6.11}\]

It is easy to obtain the probability of the intersection of any number of the events \(A_{1}, \ldots, A_{M}\) . We have

\begin{align} & P\left[A_{k}\right]=\left(\frac{M-1}{M}\right)^{n}=\left(1-\frac{1}{M}\right)^{n}, \quad k=1, \ldots, M, \\[1mm] & P\left[A_{k_{1}} A_{k_{2}}\right]=\left(1-\frac{2}{M}\right)^{n}, \quad k_{1}=1, \ldots, n, \quad k_{2}=k_{1}+1, \ldots, n, \\[1mm] & P\left[A_{k_{1}} A_{k_{2}} \cdots A_{k_{r}}\right]=\left(1-\frac{r}{M}\right)^{n}, \quad k_{1}=1, \ldots, n, \quad k_{2}=k_{1}+1, \ldots, n, \ldots, \\[1mm] & \quad\quad\quad\quad k_{r}=k_{r-1}+1, \ldots, n. \end{align}

The quantities \(S_{r}\) , defined by (6.1) , are then given by

\[S_{r}=\left(\begin{array}{c} M \tag{6.13} \\ r \end{array}\right)\left(1-\frac{r}{M}\right)^{n}, \quad r=0,1, \ldots, M.\]

Let \(B_{m}\) be the event that exactly \(m\) of the integers 1 to \(M\) will not be found in the sample. Clearly \(B_{m}\) is the event that exactly \(m\) of the events \(A_{1}\) , \(A_{2}, \ldots, A_{M}\) will occur. By (6.2) and (6.13) ,

\begin{align} P\left[B_{m}\right] & =\sum_{r=m}^{M}(-1)^{r-m}\left(\begin{array}{c} r \\ m \end{array}\right)\left(\begin{array}{c} M \\ r \end{array}\right)\left(1-\frac{r}{M}\right)^{n} \tag{6.14} \\ & =\left(\begin{array}{c} M \\ m \end{array}\right) \sum_{k=0}^{M-m}(-1)^{k}\left(\begin{array}{c} M-m \\ k \end{array}\right)\left(1-\frac{m+k}{M}\right)^{n}, \end{align}

which coincides with (6.9) .

Other applications of the theorem stated at the beginning of this section may be found in a paper by J. O. Irwin, “A Unified Derivation of Some Well-Known Frequency Distributions of Interest in Biometry and Statistics”, Journal of the Royal Statistical Society , Series A, Vol. 118 (1955), pp. 389–404 (including discussion).

The remainder of this section 1 is concerned with the proof of the theorem stated at the beginning of the section. Our proof is based on the method of indicator functions and is the work of \(\mathrm{M}\) . Loève. Our proof has the advantage of being constructive in the sense that it is not merely a verification that (6.2) is correct but rather obtains (6.2) from first principles.

The method of indicator functions proceeds by interpreting operations on events in terms of arithmetic operations. Given an event \(A\) , on a sample description space \(S\) , we define its indicator function, denoted by \(I(A)\) , as a function defined on \(S\) , with value at any description \(s\) , denoted by \(I(A ; s)\) , equal to 1 or 0, depending on whether the description \(s\) does or does not belong to \(A\) .

The two basic properties of indicator functions, which enable us to operate with them, are the following.

First, a product of indicator functions can always be replaced by a single indicator function; more precisely, for any events \(A_{1}, A_{2}, \ldots, A_{n}\) , \[I\left(A_{1}\right) I\left(A_{2}\right) \cdots I\left(A_{n}\right)=I\left(A_{1} A_{2} \cdots A_{n}\right), \tag{6.15}\] so that the product of the indicator functions of the sets \(A_{1}, A_{2}, \ldots, A_{n}\) is equal to the indicator function of the intersection \(A_{1}, A_{2}, \ldots, A_{n}\) . Equation (6.15) is an equation involving functions; strictly speaking, it is a brief method of expressing the following family of equations: for every description \(s\) \[I\left(A_{1} ; s\right) I\left(A_{2} ; s\right) \cdots I\left(A_{n} ; s\right)=I\left(A_{1} A_{2} \cdots A_{n} ; s\right).\]

To prove (6.15) , one need note only that \(I\left(A_{1} A_{2} \ldots A_{n} ; s\right)=0\) if and only if \(s\) does not belong to \(A_{1} A_{2} \ldots A_{n}\) . This is so if and only if, for some \(j=1, \ldots, n, s\) does not belong to \(A_{j}\) , which is equivalent to, for some \(j=1, \ldots, n, I\left(A_{j} ; s\right)=0\) , which is equivalent to the product \(I\left(A_{1} ; s\right) \cdots\) \(I\left(A_{n} ; s\right)=0\) .

Second, a sum of indicator functions can in certain circumstances be replaced by a single indicator function; more precisely, if the events \(A_{1}\) , \(A_{2}, \ldots, A_{n}\) are mutually exclusive, then \[I\left(A_{1}\right)+I\left(A_{2}\right)+\cdots+I\left(A_{n}\right)=I\left(A_{1} \cup A_{2} \cup \cdots \cup A_{n}\right). \tag{6.16}\]

The proof of (6.16) is left to the reader. One case, in which \(n=2\) and \(A_{2}=A_{1}^{c}\) , is of especial importance. Then \(A_{1} \cup A_{2}=S\) , and \(I\left(A_{1} \cup A_{2}\right)\) is identically equal to 1. Consequently, we have, for any event \(A\) , \[I(A)+I\left(A^{c}\right)=1, \quad I\left(A^{c}\right)=1-I(A). \tag{6.17}\] From (6.15) to (6.17) we may derive expressions for the indicator functions of various events. For example, let \(A\) and \(B\) be any two events. Then \begin{align} I(A \cup B) & =1-I\left(A^{c} B^{c}\right) \tag{6.18} \\ & =1-I\left(A^{c}\right) I\left(B^{c}\right) \\ & =1-(1-I(A))(1-I(B)) \\ & =I(A)+I(B)-I(A B). \end{align}

Our ability to write expressions in the manner of (6.18) for the indicator functions of compound events, in terms of the indicator functions of the events of which they are composed, derives its importance from the following fact: an equation involving only sums and differences (but not products) of indicator functions leads immediately to a corresponding equation involving probabilities; this relation is obtained by replacing \(I(\cdot)\) by \(P[\cdot]\) . For example, if one makes this replacement in (6.18) , one obtains the well-known formula \(P[A \cup B]=P[A]+P[B]-P[A B]\) .

The principle just enunciated is a special case of the additivity property of the operation of taking the expected value of a function defined on a probability space. This is discussed in Chapter 8 , but we shall sketch a proof here of the principle stated. We prove a somewhat more general assertion. Let \(f(\cdot)\) be a function defined on a sample description space. Suppose that the possible values of \(f\) are integers from \(-N(f)\) to \(N(f)\) for some integer \(N(f)\) that will depend on \(f(\cdot)\) . We may then represent \(f\left(\cdot\right)\) as a linear combination of indicator functions: \[f(\cdot)=\sum_{k=-N(f)}^{N(f)} k I\left[D_{k}(f)\right], \tag{6.19}\] in which \(D_{k}(f)=\{s: f(s)=k\}\) is the set of descriptions at which \(f(\cdot)\) takes the value \(k\) . Define the expected value of \(f(\cdot)\) , denoted by \(E[f(\cdot)]\) : \[E[f(\cdot)]=\sum_{k=-N(f)}^{N(f)} k P\left[D_{k}(f)\right]. \tag{6.20}\]

In words, \(E[f(\cdot)]\) is equal to the sum, over all possible values \(k\) of the function \(f(\cdot)\) , of the product of the value \(k\) and the probability that \(f(\cdot)\) will assume the value \(k\) . In particular, if \(f(\cdot)\) is an indicator function, so that \(f(\cdot)=I(A)\) for some set \(A\) , then \(E[f(\cdot)]=P[A]\) . Consider now another function \(g(\cdot)\) , which may be written \[g(\cdot)=\sum_{j=-N(g)}^{N(g)} j I\left[D_{j}(g)\right]. \tag{6.21}\] We now prove the basic additivity theorem that \[E[f(\cdot)+g(\cdot)]=E[f(\cdot)]+E[g(\cdot)]. \tag{6.22}\]

The sum \(f(\cdot)+g(\cdot)\) of the two functions is a function whose possible values are numbers, \(-N\) to \(N\) , in which \(N=N(f)+N(g)\) . However, we may represent the function \(f(\cdot)+g(\cdot)\) in terms of the indicator functions \(I\left[D_{k}(f)\right]\) and \(l\left[D_{j}(g)\right]\) : \[f(\cdot)+g(\cdot)=\sum_{k=-N(f)}^{N(f)} \sum_{j=-N(g)}^{N(g)}(k+j) I\left[D_{k}(f) D_{j}(g)\right].\] Therefore, \begin{align} E[f(\cdot)+g(\cdot)]= & \sum_{k=-N(f)}^{N(f)} \sum_{j=-N(g)}^{N(g)}(k+j) P\left[D_{k}(f) D_{j}(g)\right] \\ = & \sum_{k=-N(f)}^{N(f)} k \sum_{j=-N(g)}^{N(g)} P\left[D_{k}(f) D_{j}(g)\right] \\ & \quad+\sum_{j=-N(g)}^{N(g)} j \sum_{k=-N(f)}^{N(f)} P\left[D_{k}(f) D_{j}(g)\right] \\ & =\sum_{k=-N(f)}^{N(f)} k P\left[D_{k}(f)\right]+\sum_{j=-N(g)}^{N(g)} j P\left[D_{j}(g)\right] \\ & =E[f(\cdot)]+E[g(\cdot)], \end{align} and the proof of (6.22) is complete. By mathematical induction we determine from (6.22) that, for any \(n\) functions, \(f_{1}(\cdot), f_{2}(\cdot), \ldots, f_{n}(\cdot)\) ,

\[E\left[f_{1}(\cdot)+\cdots+f_{n}(\cdot)\right]=E\left[f_{1}(\cdot)\right]+\cdots+E\left[f_{n}(\cdot)\right]. \tag{6.23}\]

Finally, from (6.23) , and the fact that \(E[I(A)]=P[A]\) , we obtain the principle we set out to prove; namely, that, for any events \(A, A_{1}, \ldots, A_{n}\) , if \[I(A)=c_{1} I\left(A_{1}\right)+c_{2} I\left(A_{2}\right)+\cdots+c_{n} I\left(A_{n}\right), \tag{6.24}\] in which the \(c_{i}\) are either +1 or -1, then \[P[A]=c_{1} P\left[A_{1}\right]+c_{2} P\left[A_{2}\right]+\cdots+c_{n} P\left[A_{n}\right]. \tag{6.25}\]

We now apply the foregoing considerations to derive (6.2) . The event \(B_{m}\) , that exactly \(m\) of the \(M\) events \(A_{1}, A_{2}, \ldots, A_{M}\) occur, may be expressed as the union, over all subsets \(J_{m}=\left\{i_{1}, \ldots, i_{m}\right\}\) of size \(m\) of the integers \(\{1,2, \ldots, M\}\) , of the events \(A_{i_{1}} \ldots A_{i_{m}} A_{i_{m+1}}^{c} \ldots A_{i_{M I}}^{c}\) ; there are \(\left(\begin{array}{l}M \\ m\end{array}\right)\) such events, and they are mutually exclusive. Consequently, by (6.15) - (6.17) , \[I\left(B_{m}\right)=\sum_{J_{m}} I\left(A_{i_{1}}\right) \cdots I\left(A_{i_{m}}\right)\left[1-I\left(A_{i_{m+1}}\right)\right] \cdots\left[1-I\left(A_{i_{M}}\right)\right]. \tag{6.26}\] Now each term in (6.26) may be written \begin{align} I\left(A_{i_{1}}\right) \cdots I\left(A_{i_{m}}\right) & \left\{1-H_{1}\left(J_{m}\right)+\cdots+(-1)^{k} H_{k}\left(J_{m}\right)\right. \tag{6.27} \\[1mm] & \left.+\cdots \pm H_{M-m}\left(J_{m}\right)\right\}, \end{align} where we define \(H_{k}\left(J_{m}\right)=\Sigma I\left(A_{j_{1}} \ldots A_{j_{k}}\right)\) , in which the summation is over all subsets of size \(k\) of the set of integers \(\left\{i_{m+1}, \ldots, i_{M}\right\}\) . Now because of symmetry and (6.15) , one sees that \[\sum_{J_{m}} I\left(A_{i_{2}}\right) \cdots I\left(A_{i_{m}}\right) H_{k}\left(J_{m}\right)=\left(\begin{array}{c} m+k \tag{6.28} \\ m \end{array}\right) H_{k+m}.\]

\(H_{k+m}=\Sigma I\left(A_{j_{1}} \ldots A_{j_{k+m}}\right)\) , in which the summation is over all subsets of size \(k+m\) of the set of integers \(\{1,2, \ldots, M\}\) . To see (6.28) , note that there are \(\left(\begin{array}{l}M \\ m\end{array}\right)\) terms in \(J_{m},\left(\begin{array}{c}M-m \\ k\end{array}\right)\) terms in \(H_{k}\left(J_{m}\right)\) , and \(\left(\begin{array}{c}M \\ m+k\end{array}\right)\) terms in \(H_{k+m}\) and use the fact that \(\left(\begin{array}{c}M \\ m\end{array}\right)\left(\begin{array}{c}M-m \\ k\end{array}\right) \div\left(\begin{array}{c}M \\ m+k\end{array}\right)=\) \(\left(\begin{array}{c}m+k \\ m\end{array}\right)\) .

Finally, from (6.24) to (6.28) and (6.1) we obtain \[P\left[B_{m}\right]=\sum_{k=0}^{M-m}(-1)^{k}\left(\begin{array}{c} m+k \tag{6.29} \\ m \end{array}\right) S_{k+m},\] which is the same as (6.2) . Equation (6.4) follows immediately from (6.2) by induction.

Theoretical Exercises

6.1. Matching problem (case of sampling without replacement) . Show that for \(j=1, \ldots, M\) the conditional probability of a match in the \(j\) th urn, given that there are \(m\) matches, is \(m / M\) . Show, for any 2 unequal integers \(j\) and \(k, 1\) to \(M\) , that the conditional probability that the ball number \(j\) was placed in urn number \(k\) , given that there are \(m\) matches, is equal to \((M-m)(M-m-1) / M(M-1)\) .

6.2. Matching (case of sampling with replacement) . Consider the matching problem under the assumption that, for \(j=1,2, \ldots, M\) , the ball inserted in the \(j\) th urn was chosen randomly from all the \(M\) balls available (and then made available as a candidate for insertion in the \((j+1)\) st urn). Show that the probability of at least 1 match is \(1-[1-(1 / M)]^{M} \doteq 1-e^{-1}=\) 0.63212. Find the probability of exactly \(m\) matches.

6.3. A man addresses \(n\) envelopes and writes \(n\) checks in payment of \(n\) bills.

(i) If the \(n\) bills are placed at random in the \(n\) envelopes, show that the probability that each bill will be placed in the wrong envelope is \[\sum_{k=2}^{n}(-1)^{k}(1 / k !).\]

(ii) If the \(n\) bills and \(n\) checks are placed at random in the \(n\) envelopes, 1 in each envelope, show that the probability that in no instance will the enclosures be completely correct is \(\sum_{k=0}^{n}(-1)^{k}(n-k) ! /(n ! k !)\) .

(iii) In part (ii) the probability that each bill and each check will be in a wrong envelope is equal to the square of the answer to part (i).

6.4. A sampling (or coupon collecting) problem . Consider an urn that contains \(r M\) balls, for given integers \(r\) and \(M\) . Suppose that for each integer \(j\) , 1 to \(M\) , exactly \(r\) balls bear the integer \(j\) . Find the probability that in a sample of size \(n\) (in which \(n \geq M\) ), drawn without replacement from the urn, exactly \(m\) of the integers 1 to \(M\) will be missing.

Hint : \[S_{j}=\left(\begin{array}{c} M \\ j \end{array}\right)[r(M-j)]_{n} /(r M)_{n}\]

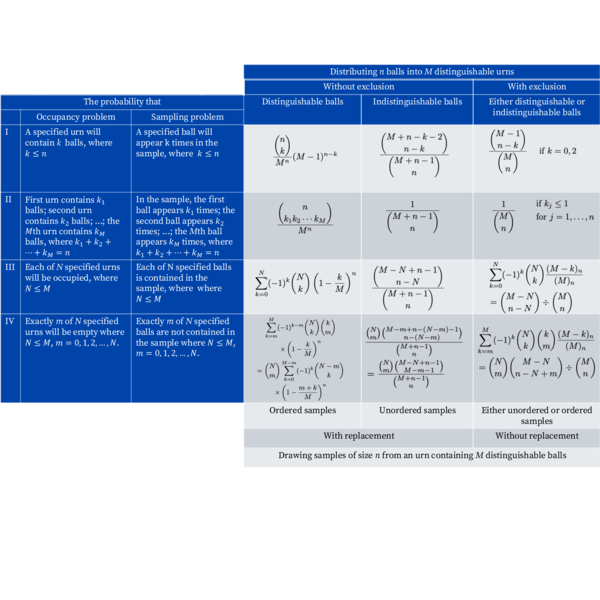

6.5. Verify the formulas in row I of Table 6A .

6.6. Verify the formulas in row II of Table 6A.

6.7. Verify the formulas in row III of Table 6A.

6.8. Verify the formulas in row IV of Table 6A.

Exercises

6.1. If 10 indistinguishable balls are distributed among 7 urns in such a way that all arrangements are equally likely, what is the probability that (i) a specified urn will contain 3 balls, (ii) all urns will be occupied, (iii) exactly 5 urns will be empty?

Answer

(i) \(\left(\begin{array}{c}12 \\ 7\end{array}\right) /\left(\begin{array}{l}16 \\ 10\end{array}\right)\) ; (ii) \(\left(\begin{array}{l}9 \\ 3\end{array}\right) /\left(\begin{array}{l}16 \\ 10\end{array}\right)\) ; (iii) \(9\left(\begin{array}{l}7 \\ 5\end{array}\right) /\left(\begin{array}{l}16 \\ 10\end{array}\right)\) .

6.2. If 7 indistinguishable balls are distributed among 10 urns in such a way that not more than 1 ball may be put in any urn and all such arrangements are equally likely, what is the probability that (i) a specified urn will contain 1 ball (ii) exactly 3 of the first 4 urns will be empty?

6.3. If 10 distinguishable balls are distributed among 4 urns in such a way that all arrangements are equally likely, what is the probability that (i) a specified urn will contain 6 balls, (ii) the first urn will contain 4 balls, the second urn will contain 3 balls, the third urn will contain 2 balls, and the fourth urn will contain 1 ball, (iii) all urns will be occupied?

Answer

(i) \(\left(\begin{array}{c}10 \\ 6\end{array}\right) / 4^{10} ;\) (ii) \(\left(\begin{array}{c}10 \\ 4321\end{array}\right) / 4^{10}\) ; (iii) \(\sum_{k=0}^{4}(-1)^{k}\left(\begin{array}{l}4 \\ k\end{array}\right)\left(1-\frac{k}{4}\right)^{10}\) .

6.4. Consider 5 families, each consisting of 4 persons. If it is reported that 6 of the 20 individuals in these families have a contagious disease, what is the probability that (i) exactly 2, (ii) at least 3 of the families will be quarantined?

6.5. Write out (6.2) and (6.4) for (i) \(M=2\) and \(m=0,1,2\) , (ii) \(M=3\) and \(m=0,1,2,3\) , (iii) \(M=4\) and \(m=0,1,2,3,4\) .

Answer

(i) \(P\left[B_{0}\right]=1-S_{1}+S_{2}, P\left[B_{1}\right]=S_{1}-2 S_{2}, P\left[B_{2}\right]=S_{2}\) .

(ii) \(P\left[B_{0}\right]=1-S_{1}+S_{2}-S_{3}, P\left[B_{1}\right]=S_{1}-2 S_{2}+3 S_{3}, P\left[B_{2}\right]=S_{2}-3 S_{3}\) , \(P\left[B_{3}\right]=S_{3}\) .

(iii) \(P\left[B_{0}\right]=1-S_{1}+S_{2}-S_{3}+S_{4}, P\left[B_{1}\right]=S_{1}-2 S_{2}+3 S_{3}-4 S_{4}\) ,

\(P\left[B_{2}\right]=S_{2}-3 S_{3}+6 S_{4}, P\left[B_{3}\right]=S_{3}-4 S_{4}, P\left[B_{4}\right]=S_{4}. P\) [at least 1 \(]=\)

\(S_{1}-S_{2}+S_{3}-\cdots \pm S_{M}. P[\) at least 2 \(]=S_{2}-2 S_{3}+3 S_{4}-\cdots \pm M S_{M}\) .

\(P\) [at least 3] \(=S_{3}-3 S_{4}+\cdots \pm \frac{1}{2} M(M-1) S_{M}. \quad P\) [at least \(M\) ] \(=S_{M}\) .

- The remainder of this section may be omitted in a first reading of the book. ↩︎