Taylor Approximation

Table of Contents

- 17.1 INTRODUCTION

- 17.2 LECTURE

- 17.2.1 Unveiling the Multidimensional Taylor Formula

- 17.2.2 Tensors and the Multidimensional Taylor Series Representation

- 17.2.3 The Local Approximation Through Linearization

- 17.2.4 Getting Even Closer: Quadratic Approximations with Hessians

- 17.2.5 The Tangent Plane to a Surface

- 17.2.6 How Gradients Help Find Tangent Planes to Surfaces

- 17.3 EXAMPLES

- EXERCISES

17.1 INTRODUCTION

17.1.1 How Linearity Helped Feynman Conquer the Cube Root

According to legend1, Richard Feynman got into the challenge to compute the cube root of \(1729.03\) against an Abacus computation. By using linear approximation and a bit of luck, he could get \(12.002384\) using paper and pencil. The actual cube root is \(12.002383785691718123057\). How did Feynman do it? The secret is in linear approximation. This means that we approximate a function like \(f(x)=x^{1 / 3}\) with a linear function. The same can be done with functions of several variables. The linear approximation if of the form \(L(x)=f(a)+f^{\prime}(a)(x-a)\).

17.1.2 Beyond Linear Approximations

One can also do higher order approximations. The function \(f(x)=e^{x}\) for example has the linear approximation \(L(x)=1+x\) at \(a=0\) and the quadratic approximation \(Q(x)=1+x+x^{2} / 2\) at \(a=0\). To get the quadratic term, we just need to make sure that the first and second derivative at \(x=a\) agree. This gives the formula \[Q(x)=f(a)+f^{\prime}(a)(x-a)+f^{\prime \prime}(a)(x-a)^{2} / 2.\] Indeed, you can check that \(f(x)\) and \(Q(x)\) have the same first derivatives and the same second derivatives at \(x=a\). A degree \(n\) approximation is then the polynomial \[P_{n}(x)=\sum_{k=0}^{n} f^{(k)}(a) \frac{(x-a)^{k}}{k !}.\] For the function \(e^{x}\) for example, we have the \(m\)’th order approximation \[e^{x}=1+x+x^{2} / 2 !+x^{3} / 3 !+\cdots+x^{n} / n !.\]

17.1.3 Multivariable Approximations

The same can be done in higher dimensions. Everything is the same. We just have to use the derivative \(d f\) rather than the usual derivative \(f^{\prime}\). We look here only at linear and quadratic approximation of functions \(\mathbb{R}^{n} \rightarrow \mathbb{R}\) The linear approximation is then \[L(x)=f(a)+\nabla f(a)(x-a)\] where \[\nabla f(a)=d f(a)=\big[f_{x_{1}}(a), \cdots, f_{x_{n}}(a)\big]\] is the Jacobian matrix, which ii a row vector. Now, since we can see \(d f(x): \mathbb{R}^{n} \rightarrow \mathbb{R}^{n}\) the second derivative is a matrix \(d^{2} f(x)=H(x)\). It is called the Hessian. It encodes all the second derivatives \(H_{i j}(x)=\) \(f_{x_{i} x_{j}}\).

17.2 LECTURE

17.2.1 Unveiling the Multidimensional Taylor Formula

Given a function \(f: \mathbb{R}^{m} \rightarrow \mathbb{R}^{n}\), its derivative \(d f(x)\) is the Jacobian matrix. For every \(x \in \mathbb{R}^{m}\), we can use the matrix \(d f(x)\) and a vector \(v \in \mathbb{R}^{m}\) to get \(D_{v} f(x)=\) \(d f(x) v \in \mathbb{R}^{m}\). For fixed \(v\), this defines a map \(x \in \mathbb{R}^{m} \rightarrow d f(x) v \in \mathbb{R}^{n}\), like the original \(f\). Because \(D_{v}\) is a map on \[\mathcal{X}=\left\{\text{all functions from }\mathbb{R}^{m} \rightarrow \mathbb{R}^{n}\right\},\] one calls it an operator. The Taylor formula \(f(x+t)=e^{D t} f(x)\) holds in arbitrary dimensions:

Theorem 1. \(\displaystyle f(x+t v)=e^{D_{v} t} f=f(x)+\dfrac{D_{v} t f(x)}{1 !}+\dfrac{D_{v}^{2} t^{2} f(x)}{2 !}+\cdots\)

Proof. It is the single variable Taylor on the line \(x+t v\). The directional derivative \(D_{v} f\) is there the usual derivative as \[\lim_{t \rightarrow 0}[f(x+t v)-f(x)] / t=D_{v} f(x).\] Technically, we need the sum to converge as well: like functions built from polynomials, \(\sin\), \(\cos\), \(\exp\). ◻

17.2.2 Tensors and the Multidimensional Taylor Series Representation

The Taylor formula can be written down using successive derivatives \(d f\), \(d^{2} f\), \(d^{3} f\) also, which are then called tensors. In the scalar case \(n=1\), the first derivative \(d f(x)\) leads to the gradient \(\nabla f(x)\), the second derivative \(d^{2} f(x)\) to the Hessian matrix \(H(x)\) which is a bilinear form acting on pairs of vectors. The third derivative \(d^{3} f(x)\) then acts on triples of vectors etc. One can still write as in one dimension

Theorem 2. \(f(x)=f(x_{0})+f^{\prime}(x_{0})(x-x_{0})+f^{\prime \prime}(x_{0}) \frac{(x-x_{0})^{2}}{2 !}+\cdots\)

if we write \(f^{(k)}=d^{k} f\). For a polynomial, this just means that we first write down the constant, then all linear terms then all quadratic terms, then all cubic terms etc.

17.2.3 The Local Approximation Through Linearization

Assume \(f: \mathbb{R}^{m} \rightarrow \mathbb{R}\) and stop the Taylor series after the first step. We get \[L(x_{0}+v)=f(x_{0})+\nabla f(x_{0}) \cdot v.\] It is custom to write this with \(x=x_{0}+v\), \(v=x-x_{0}\) as \[L(x)=f(x_{0})+\nabla f(x_{0}) \cdot(x-x_{0})\] This function is called the linearization of \(f\). The kernel of \(L-f\left(x_{0}\right)\) is a linear manifold approximating the surface \[\left\{x \mid f(x)-f\left(x_{0}\right)=0\right\}.\] If \(f: \mathbb{R}^{m} \rightarrow \mathbb{R}^{n}\), then the just said can be applied to every component \(f_{i}\) of \(f\), with \(1 \leq i \leq n\). One can not stress enough the importance of this linearization.2

17.2.4 Getting Even Closer: Quadratic Approximations with Hessians

If we stop the Taylor series after two steps, we get the function \[Q(x+v)=f(x)+d f(x) \cdot v+v \cdot d^{2} f(x) \cdot v / 2.\] The matrix \(H(x)=d^{2} f(x)\) is called the Hessian matrix at the point \(x\). It is also here custom to eliminate \(v\) by writing \(x=x_{0}+v\). \[Q(x)=f(x_{0})+\nabla f(x_{0}) \cdot(x-x_{0})+(x-x_{0}) \cdot H(x_{0})(x-x_{0}) / 2\] is called the quadratic approximation of \(f\). The kernel of \(Q-f(x_{0})\) is the quadratic manifold \[Q(x)-f(x_{0})=x \cdot B x+A x=0,\] where \(A=d f\) and \(B=d^{2} f / 2\). It approximates the surface \(\left\{x \mid f(x)-f\left(x_{0}\right)=0\right\}\) even better than the linear one. If \(\left|x-x_{0}\right|\) is of the order \(\epsilon\), then \(|f(x)-L(x)|\) is of the order \(\epsilon^{2}\) and \(|f(x)-Q(x)|\) is of the order \(\epsilon^{3}\). This follows from the exact Taylor with remainder formula.3

17.2.5 The Tangent Plane to a Surface

To get the tangent plane to a surface \(f(x)=C\) one can just look at the linear manifold \(L(x)=C\). However, there is a better method:

The tangent plane to a surface \(f(x, y, z)=C\) at \((x_{0}, y_{0}, z_{0})\) is \(a x+b y+c z=\) \(d\), where \([a, b, c]^{T}=\nabla f(x_{0}, y_{0}, z_{0})\) and \(d=a x_{0}+b y_{0}+c z_{0}\).

17.2.6 How Gradients Help Find Tangent Planes to Surfaces

This follows from the fundamental theorem of gradients:

Theorem 3. The gradient \(\nabla f(x_{0})\) of \(f: \mathbb{R}^{m} \rightarrow \mathbb{R}\) is perpendicular to the surface \(S=\left\{f(x)=f(x_{0})=C\right\}\) at \(x_{0}\).

Proof. Let \(r(t)\) be a curve on \(S\) with \(r(0)=x_{0}\). The chain rule assures \[d / d t f(r(t))=\nabla f(r(t)) \cdot r^{\prime}(t).\] But because \(f(r(t))=c\) is constant, this is zero assuring \(r^{\prime}(t)\) being perpendicular to the gradient. As this works for any curve, we are done. ◻

17.3 EXAMPLES

Example 1. Let \(f: \mathbb{R}^{2} \rightarrow \mathbb{R}\) be given as \(f(x, y)=x^{3} y^{2}+x+y^{3}\). What is the quadratic approximation at \((x_{0}, y_{0})=(1,1)\)? We have \(d f(1,1)=[4,5]\) and \[\begin{aligned} \nabla f(1,1)&=\left[\begin{array}{l} f_{x} \\ f_{y} \end{array}\right]=\left[\begin{array}{l} 4 \\ 5 \end{array}\right],\\ H(1,1)&=\left[\begin{array}{ll} f_{x x} & f_{x y} \\ f_{y x} & f_{y y} \end{array}\right]=\left[\begin{array}{ll} 6 & 6 \\ 6 & 8 \end{array}\right]. \end{aligned}\]

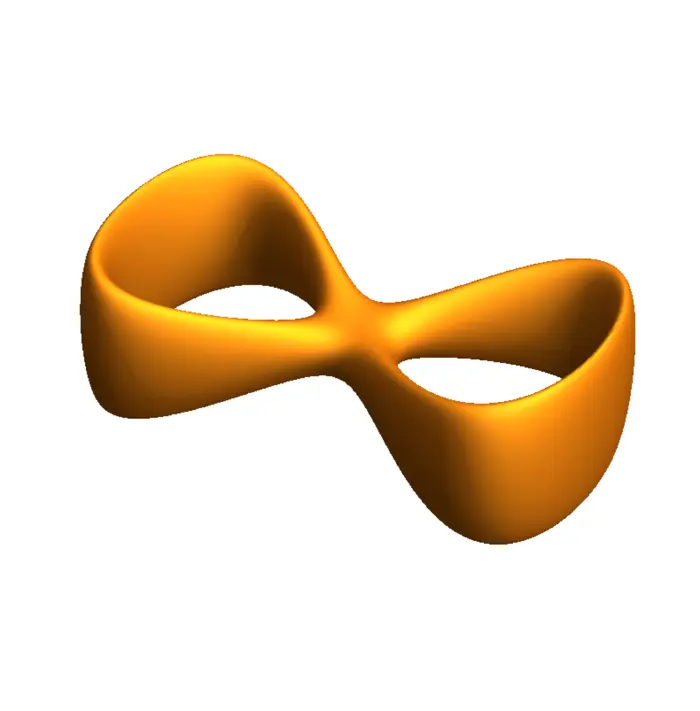

The linearization is \[L(x, y)=4(x-1)+5(y-1)+3.\] The quadratic approximation is \[Q(x, y)=3+4(x-1)+5(y-1)+6(x-1)^{2} / 2+12(x-1)(y-1) / 2+8(y-1)^{2} / 2.\] This is the situation displayed to the left in Figure (17.2). For \(v=[7,2]^{T}\), the directional derivative \[\begin{aligned} D_{v} f(1,1)&=\nabla f(1,1) \cdot v\\ &=[4,5]^{T} \cdot[7,2]=38. \end{aligned}\] The Taylor expansion given at the beginning is a finite series because \(f\) was a polynomial: \[\begin{aligned} f([1,1]+t[7,2])&=f(1+7 t, 1+2 t)\\ &=3+38 t+247 t^{2}+1023 t^{3}+1960 t^{4}+1372 t^{5}. \end{aligned}\]

Example 2. For \(f(x, y, z)=-x^{4}+x^{2}+y^{2}+z^{2}\), the gradient and Hessian are \[\begin{aligned} \nabla f(1,1,1)&=\left[\begin{array}{l} f_{x} \\ f_{y} \\ f_{z} \end{array}\right]=\left[\begin{array}{l} 2 \\ 2 \\ 2 \end{array}\right],\\ H(1,1,1)&=\left[\begin{array}{lll} f_{x x} & f_{x y} & f_{x z} \\ f_{y x} & f_{y y} & f_{y z} \\ f_{z x} & f_{z y} & f_{z z} \end{array}\right]=\left[\begin{array}{ccc} -10 & 0 & 0 \\ 0 & 2 & 0 \\ 0 & 0 & 2 \end{array}\right]. \end{aligned}\]

The linearization is \[L(x, y, z)=2-2(x-1)+2(y-1)+2(z-1).\] The quadratic approximation \[Q(x, y, z)=2-2(x-1)+2(y-1)+2(z-1)+\left(-10(x-1)^{2}+2(y-1)^{2}+2(z-1)^{2}\right) / 2\] is the situation displayed to the right in Figure (17.2).

Example 3. What is the tangent plane to the surface \(f(x, y, z)=1 / 10\) for \[\begin{aligned} f(x, y, z)&=10 z^{2}-x^{2}-y^{2}+100 x^{4}-200 x^{6}+100 x^{8}-200 x^{2} y^{2}+200 x^{4} y^{2}+100 y^{4}\\ &=1 / 10 \end{aligned}\] at the point \((x, y, z)=(0,0,1 / 10)\)? The gradient is \[\nabla f(0,0,1 / 10)=\left[\begin{array}{l}0 \\ 0 \\ 2\end{array}\right].\] The tangent plane equation is \(2 z=d\), where the constant \(d\) is obtained by plugging in the point. We end up with \(2 z=2 / 10\). The linearization is \(L(x, y, z)=1 / 20+2(z-1 / 10)\).

EXERCISES

Exercise 1. Let \(r(t)=[3 t+\cos (t), t+4 \sin (t)]^{T}\) be a curve and \[f\left([x, y]^{T}\right)=\left[x^{3}+y, x+2 y+y^{3}\right]^{T}\] be a coordinate change.

- Compute \(v=r^{\prime}(0)\) at \(t=0\), then \(d f(x, y)\) and \(A=d f(r(0))\) and \(d f(r(0)) r^{\prime}(0)=A v\).

- Compute \(R(t)=f(r(t))\) first, then find \(w=R^{\prime}(0)\). It should agree with a).

Exercise 2.

- The surface \[f(x, y, z)=x^{2}+\frac{y^{2}}{4}+\frac{z^{2}}{9}=4+1 / 4+1 / 9\] is an ellipsoid. Compute \(z_{x}(x, y)\) at the point \((x, y, z)=(2,1,1)\) using the implicit differentiation rule. (Use the formula).

- Apply the Newton step 3 times starting with \(x=2\) to solve the equation \(x^{2}-2=0\).

Exercise 3. Evaluate without technology the cube root of \(1002\) using quadratic approximation. Especially look how close you are to the real value.

Exercise 4.

- Find the tangent plane to the surface \(f(x, y, z)=\) \(\sqrt{x y z}=60\) at \((x, y, z)=(100,36,1)\).

- Estimate \(\sqrt{100.1 \cdot 36.1 \cdot 0.999}\) using linear approximation (compute \(L(x, y, z)\) rather than \(f(x, y, z)\).)

Exercise 5. Find the quadratic approximation \(Q(x, y)\) of \[f(x, y)=x^{3}+x^{2} y+x^{2}+y^{2}-2 x+3 x y\] at the point \((1,2)\) by computing the gradient vector \(\nabla f(1,2)\) and the Hessian matrix \(H(1,2)\). The vector \(\nabla f(1,2)\) is a \(1 \times 2\) matrix (row vector) and the Hessian matrix \(H(1,2)\) is a \(2 \times 2\) matrix.